TRANSCRIPT: Dark Side of AI – How Hackers use AI & Deepfakes: Mark T. Hofmann

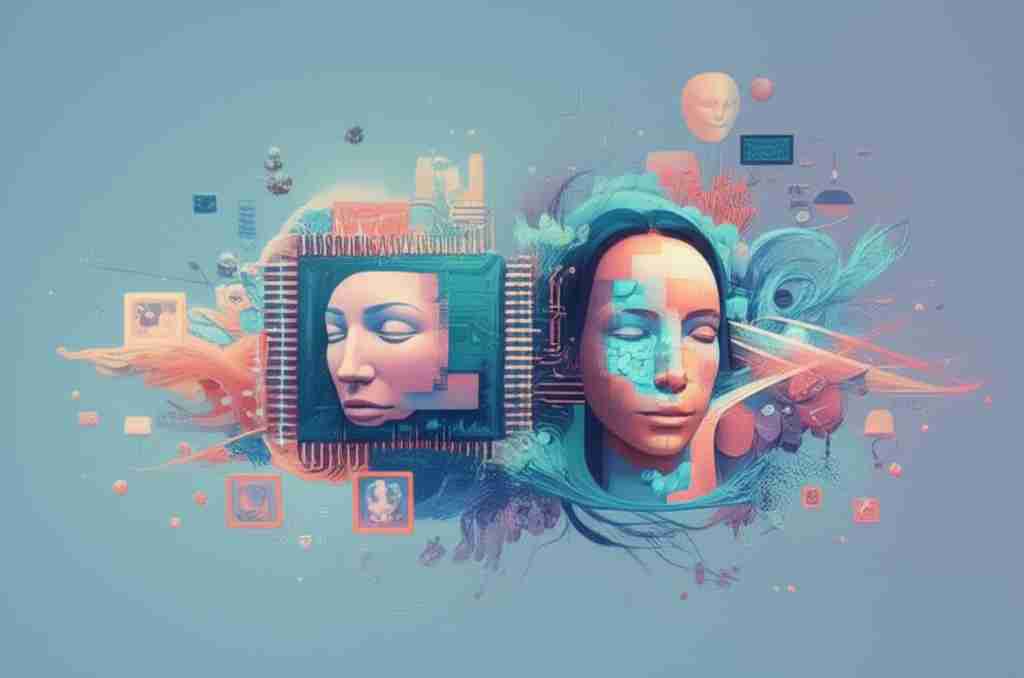

Introduction: The Double-Edged Sword of Artificial Intelligence

Artificial intelligence (AI) is revolutionizing industries at lightning speed, bringing monumental benefits and equally monumental risks. Just as a kitchen knife can prepare a meal or cause harm, AI is neutral by nature; its ethical value depends on who uses it. In his compelling talk, “Dark Side of AI – How Hackers use AI & Deepfakes,” criminal analyst and business psychologist Mark T. Hofmann explores the growing threats posed by hackers who exploit AI and deepfake technologies. He draws our attention to the darker side of progress, where cybercriminals use the same AI tools that power innovation to launch cyberattacks, manipulate human psychology, and undermine our sense of reality. Drawing on Hofmann’s insights, this blog post explores how hackers use AI and deepfakes, and how we can protect ourselves in an era where seeing and hearing may no longer mean believing.

Understanding the Cybercrime Economy: AI as a Catalyst for Crime

Films often portray hackers as isolated teenagers in dark rooms. The reality, as Hofmann points out, is far more sophisticated. Cybercrime is a vast industry whose global cost is projected to exceed $10 trillion annually. If cybercrime were a country, it would be the world’s third-largest economy, behind only the United States and China.

- Ransomware dominates the business model: hackers encrypt an organization’s files, then demand payment to restore access. Ransom demands range from $2,000 for individuals to $240 million for large corporations.

- Criminal organizations now mimic legitimate businesses: they offer customer support to their victims, recruit technical talent, and even operate affiliate programs where hackers earn commissions by spreading malicious software.

- Thanks to AI, technical barriers to entry are crumbling. Anyone with a laptop, an internet connection, and malicious intent can potentially launch sophisticated attacks, no programming skills required.

- Motivation extends beyond money: hackers are also driven by thrill-seeking, defiance of authority, ego, and dark humor.

As AI lowers the barriers to entry, cybercrime continues to grow in scale and accessibility, ushering in an era in which greater vigilance is essential.

The Methods: How Hackers Exploit AI & Deepfakes

Hofmann identifies four escalating “levels of darkness” in how hackers misuse AI:

- Psychological Manipulation (Reverse Psychology Tactics): Hackers exploit human tendencies to divulge information or click malicious links, often posing as legitimate parties to lower defenses.

- Prompt Jailbreaking: By crafting complex prompts, hackers manipulate AI models like ChatGPT to bypass built-in safeguards and generate harmful content, such as malware code or phishing templates.

- Custom Malicious AI Models: Beyond misusing public models, hackers are now creating their own, specifically designed to generate malware, flawless phishing emails, and even coordinate attacks autonomously.

- Autonomous AI-driven Cyberattacks: Hofmann warns that, in the future, AI might become capable of automating entire cybercrime operations—from identifying targets on the dark web to launching campaigns and reporting results—potentially without human intervention.

As social engineering becomes ever more personalized, AI is eliminating traditional warning signs such as typos and generic greetings, making attacks alarmingly convincing.

Hofmann’s talk, whose transcript is published on SingjuPost, highlights the growing sophistication of AI-driven cyberattacks and deepfakes. He describes hackers using AI not only to craft highly realistic phishing attempts and social engineering schemes, but also to develop custom malware and produce nearly indistinguishable fake audio and video. These observations, which Hofmann draws from interviews with hackers and forensic analysis, underscore how important it is for organizations and individuals to stay vigilant against threats that no longer depend on technical skill alone but instead exploit the psychological vulnerabilities of their targets.

Deepfakes: The Rise of AI-powered Fraud and Misinformation

One of the most chilling consequences of AI’s advancement is how easily deepfakes (audio and visual forgeries generated by neural networks) can be used to spread disinformation and commit fraud:

- A single high-resolution image can now be enough to create a lifelike video of a person, complete with mimicked facial expressions and gestures.

- For voice cloning, just 15–30 seconds of recorded speech may suffice to convincingly replicate someone’s voice—far less than the hours once required.

- Hackers have used deepfakes to impersonate CEOs, fraudulently directing finance teams to transfer millions of dollars, or to trick elderly relatives into wiring money under the guise of a distressed grandchild.

- Deepfakes are already being used for political disinformation, such as fabricated videos urging soldiers to surrender on the battlefield or content designed to undermine public trust in legitimate leaders.

- Even romance scams are now supercharged by AI, which can generate believable yet entirely synthetic personas to deceive and exploit unsuspecting victims.

Beyond fraud, deepfakes threaten the integrity of evidence itself. Hofmann quips that soon, anyone caught on camera could simply claim, “It’s not me—it’s a deepfake.” This erosion of trust profoundly challenges the justice system, journalism, and society at large.

Psychology of Human Error and Social Engineering

While AI and deepfake technology make threats more sophisticated, most successful cyberattacks still trace back to human error. Hackers primarily exploit human psychology rather than technical vulnerabilities:

- People are tricked into clicking malicious links or opening infected attachments, often under the guise of urgency or authority (“This is your CEO—respond immediately!”).

- USB drives labeled “Secret” are deliberately planted in public places to look lost, exploiting curiosity so that whoever plugs one in unwittingly compromises the company network.

- Imposters exploit emotional triggers—fear, love, or obligation—to bypass rational scrutiny, convincing family members, employees, or even entire organizations to share confidential information or part with cash.

- Remote work and ubiquitous online communication increase the opportunities for such deception.

Attackers combine authority, time pressure, emotional appeals, and requests for unexpected exceptions to normal procedure to bypass victims’ defenses. AI augments these deceptions, making them appear even more authentic and urgent.
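The cues listed above can be turned into a simple self-check that a recipient runs through before acting. The Python sketch below is purely illustrative and is not taken from Hofmann’s talk: `Message`, `red_flags`, and `needs_verification` are hypothetical names, the meaning of “exception” (skipping the usual procedure) is an assumption, and the threshold of two cues is arbitrary. Because AI removes surface cues such as typos, the checklist asks what a message demands rather than how it is written.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Message:
    """Hypothetical self-assessment of an incoming request, filled in by the recipient."""
    claims_authority: bool           # e.g. "This is your CEO"
    time_pressure: bool              # e.g. "respond immediately!"
    emotional_appeal: bool           # fear, love, or obligation
    asks_for_exception: bool         # assumed meaning: "skip the usual procedure just this once"
    involves_money_or_secrets: bool  # payment, credentials, confidential data


def red_flags(msg: Message) -> list[str]:
    """Return the names of the manipulation cues present, in a fixed order."""
    cues = {
        "authority": msg.claims_authority,
        "time pressure": msg.time_pressure,
        "emotional appeal": msg.emotional_appeal,
        "unexpected exception": msg.asks_for_exception,
    }
    return [name for name, present in cues.items() if present]


def needs_verification(msg: Message, threshold: int = 2) -> bool:
    """Verify whenever money or secrets are involved, or when several cues are stacked.

    The threshold of two cues is an arbitrary illustrative choice.
    """
    return msg.involves_money_or_secrets or len(red_flags(msg)) >= threshold


# Usage: a typical "urgent CEO transfer" request.
ceo_fraud = Message(
    claims_authority=True,
    time_pressure=True,
    emotional_appeal=False,
    asks_for_exception=True,
    involves_money_or_secrets=True,
)
print(red_flags(ceo_fraud))           # ['authority', 'time pressure', 'unexpected exception']
print(needs_verification(ceo_fraud))  # True
```

The point is not to automate judgment but to show that the cues rarely appear alone: one odd request may be innocent, while authority plus urgency plus a demand to bypass procedure is a strong signal to stop and verify.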

Defending Against the Dark Side: Practical Steps for Individuals and Organizations

While the threats are formidable, AI can also be part of the defense. Above all, though, Hofmann advocates for a “human firewall,” which means empowering people with awareness, skepticism, and simple but effective security habits:

- Verify, Don’t Trust Blindly: Never act on urgent demands or unexpected requests involving money or sensitive information without verification. Call back on an official number you look up yourself, not one supplied in the message, or use a second communication channel.

- Create Family and Team Passwords: Agree on a “safe word” or password within your circle. If contacted by someone claiming to be a loved one in distress, request the pre-shared code or ask security questions only the real person could answer.

- Educate and Entertain: Cybersecurity doesn’t only concern the technically minded. Training should be engaging and relevant for everyone—because most people at risk are not cybersecurity experts.

- Be Skeptical of All Media: Understand that photos, videos, and audio can be forged. Don’t accept anything at face value—especially if it involves unusual requests or damaging information.

- Limit Sharing of Personal Data: The less data available about you online, the harder it is for AI to clone your likeness and fool your contacts.

- Keep Devices and Software Updated: While much of the threat is psychological, technical defenses—patching, antivirus, VPN use—remain basic but critical barriers.
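The “Verify, Don’t Trust Blindly” habit can be written down as an explicit rule, for example in a small business’s payment procedure. The sketch below is a hypothetical illustration, not a tool Hofmann describes: `TRUSTED_DIRECTORY`, `Request`, `callback_number`, and `may_act` are invented names, and the 555-01xx numbers are fictional placeholders. Its key design choice is that the callback number always comes from records kept beforehand, never from the message itself, because a deepfake caller controls everything inside the message.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical contact list recorded in advance, through a channel you trust.
# The 555-01xx numbers are fictional placeholders.
TRUSTED_DIRECTORY = {
    "ceo": "+1-555-0100",
    "finance": "+1-555-0101",
}


@dataclass
class Request:
    claimed_sender: str     # who the message says it is from
    number_in_message: str  # contact details supplied by the sender; never used to verify
    is_sensitive: bool      # involves money or sensitive information


def callback_number(req: Request) -> str | None:
    """Look up the number to call back in our own records, ignoring the message."""
    return TRUSTED_DIRECTORY.get(req.claimed_sender)


def may_act(req: Request, number_called: str | None, confirmed: bool) -> bool:
    """Allow a sensitive request only after a confirmed callback to a known number."""
    if not req.is_sensitive:
        return True
    trusted = callback_number(req)
    # Unknown sender, wrong number dialed, or no confirmation: do not act.
    return trusted is not None and number_called == trusted and confirmed


# Usage: a convincing video call from "the CEO" asking for an urgent transfer.
deepfake_ceo = Request("ceo", number_in_message="+1-555-0199", is_sensitive=True)
print(may_act(deepfake_ceo, "+1-555-0199", confirmed=True))  # False
print(may_act(deepfake_ceo, callback_number(deepfake_ceo), confirmed=False))  # False
```

The family safe word follows the same principle: the check relies only on something agreed in advance, never on details the caller provides.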

Ultimately, Hofmann emphasizes the need to make cybersecurity “great again” by reaching those who aren’t already interested through engaging and relatable education. The goal is to create a resilient human firewall as technology races ahead.

Conclusion: Maximizing Opportunity, Minimizing Threat

AI marks the dawn of unprecedented opportunity, but the very power that fuels progress can also be weaponized. As Mark T. Hofmann’s analysis makes clear, cybercriminals are evolving, using AI and deepfakes to bypass technical and psychological defenses alike. Trust in what we see and hear is eroding fast, and a single mistake, even by a savvy user, can have far-reaching consequences.

Yet, the greatest risk isn’t AI itself, but our failure to adapt. Vigilance, curiosity, and collective action are essential as we navigate this new era. AI is, above all, a tool—our challenge is to harness it responsibly while guarding against those who choose the dark side. Stay informed, stay critical, and make cybersecurity part of your everyday conversation. The future belongs to those who understand both sides of the AI coin.

About Us

At AI Automation Darwin, we believe in unlocking the positive potential of artificial intelligence. While the rise of AI and deepfakes brings new security challenges, our mission is to help local businesses harness AI safely and efficiently. We design automation solutions that streamline your workday and keep your operations resilient—so you can leverage technology for growth while staying aware of evolving digital risks.

About AI Automation Darwin

AI Automation Darwin helps local businesses save time, reduce admin, and grow faster using smart AI tools. We create affordable automation solutions tailored for small and medium-sized businesses—making AI accessible for everything from customer enquiries and bookings to document handling and marketing tasks.

What We Do

Our team builds custom AI assistants and automation workflows that streamline your daily operations without needing tech expertise. Whether you’re in trades, retail, healthcare, or professional services, we make it easy to boost efficiency with reliable, human-like AI agents that work 24/7.